AI is powerful, but there is no magic or sentience in it. Whether it is the most powerful Generative AI model or a simple linear regression model (which we all know and are familiar with), every model has fundamental limitations. Most of these stem from the model's underlying complexity.

Now that we have a sense of how to think of AI (from my Thinking in AI and Networks series), I would like to focus on its limitations.

For this note (which starts my Thinking in Risks series), I would like to try to frame AI limitations along three dimensions:

Uncertainty, Unexpectedness, and Unexplainability.

(No link, but there’s also the 3 ‘V’s for data - Volume, Variety and Velocity.)

I will try to define them as simply as I can, list some key techniques that could mitigate them, and then discuss how we can view traditional non-AI models (whether statistical, econometric or financial), classical machine learning models (such as gradient boosted trees), predictive deep learning models as well as generative deep learning models (i.e. Generative AI) through this lens.

I hope this helps the reader see (if it was not already clear) that we should never default to Generative AI, at least not in its current state, until we have a clear-eyed view of its limitations.

As with previous discussions on AI concepts in my notes, most of these ideas are not new to AI researchers or practitioners, but I hope articulating the way I think about them might be useful to readers who are not as familiar with AI's inherent challenges. The definitions, as well as the techniques to manage different AI risks, are a dynamic area of research, and I may not be doing them justice, so I fully welcome discussion and alternative perspectives on the framing and methods discussed below.

The Three U's

Consider the three word suggestions your phone's keyboard shows as you type. Or the times your phone confidently changes a correctly spelled word into something completely unrelated and contextually absurd. Or seeing a post or an ad in a mobile app for something you were just chatting with a friend about.

These everyday experiences capture the essence of what we need to understand about AI’s limitations.

All AI models have uncertainty, some exhibit unexpected behaviors, and the degree to which we can explain their decisions varies.

Let's dive into each dimension.

Uncertainty: Known Unknowns

Think of uncertainty when predicting next week's sales figures. Sometimes the forecast is uncertain because customer behavior has natural randomness (e.g., unexpected viral trends or economic shifts), and sometimes it's uncertain because we don't have enough historical sales data or detailed customer demographics (we lack data).

This directly relates to the distribution of data the model is trained on. If your AI was trained on customer data from urban areas, it will naturally be more uncertain when making predictions about rural customers – it's operating outside its learned distribution.

Two Types of Uncertainty

Natural Randomness (Aleatoric Uncertainty) Let's call this "inherent data noise" – the randomness that exists in the real world that no amount of data can eliminate. Customer purchasing decisions on any given day have an element of randomness. Even with perfect information, we can't predict with 100% certainty if someone will choose chocolate or coconut ice cream today.

Knowledge Gaps (Epistemic Uncertainty) Let's call this "undertrained model" – uncertainty that arises because our model hasn't seen enough samples or is operating in unfamiliar territory. This is reducible with more data or better models.

Key Techniques to Address Uncertainty

Some techniques that could manage uncertainty:

Conformal Prediction Similar to political polls that say "45% ± 3%", this method provides a coverage guarantee: the true answer falls within the predicted range a specified percentage of the time, provided new data resembles the data used to calibrate the range.
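
To make the idea concrete, here is a minimal sketch of one common variant (split conformal prediction for a numeric forecast). The forecaster, the made-up sales numbers and the function name conformal_interval are my own assumptions for illustration, not a reference implementation.

```python
import math
import random


def conformal_interval(cal_preds, cal_actuals, new_pred, coverage=0.9):
    """Interval around new_pred, sized from errors on held-out calibration data."""
    # Score each calibration point by how wrong the model was (absolute error).
    scores = sorted(abs(a - p) for p, a in zip(cal_preds, cal_actuals))
    n = len(scores)
    # Finite-sample corrected rank: ceil((n + 1) * coverage).
    rank = math.ceil((n + 1) * coverage)
    if rank > n:
        raise ValueError("too few calibration points for this coverage level")
    margin = scores[rank - 1]
    return new_pred - margin, new_pred + margin


# Made-up calibration data: a hypothetical forecaster always predicts 100,
# while actual sales are 100 plus random noise.
rng = random.Random(0)
cal_actuals = [100 + rng.gauss(0, 5) for _ in range(200)]
cal_preds = [100.0] * 200

low, high = conformal_interval(cal_preds, cal_actuals, new_pred=100.0)
print(f"90% interval: [{low:.1f}, {high:.1f}]")
```

The interval width comes entirely from how wrong the model was on data it did not train on, which is why the guarantee weakens when new data stops resembling the calibration data.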

Ensembles Training multiple models and combining their predictions – the AI equivalent of seeking second opinions from different specialists.
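
As a rough sketch of the second-opinions idea, the snippet below trains 50 simple straight-line models on resampled copies of the same made-up data and uses their disagreement as an uncertainty signal. The data, the choice of a bootstrap ensemble and all function names are assumptions for illustration.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b * x."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def bootstrap_ensemble(xs, ys, n_models=50, seed=0):
    """Fit each model on a resample (with replacement) of the training data."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def predict_with_spread(models, x):
    """Average prediction and the standard deviation across ensemble members."""
    preds = [a + b * x for a, b in models]
    return statistics.fmean(preds), statistics.stdev(preds)


# Made-up training data covering x between 0 and 10 only.
rng = random.Random(1)
xs = [rng.uniform(0, 10) for _ in range(30)]
ys = [2 * x + rng.gauss(0, 1) for x in xs]
models = bootstrap_ensemble(xs, ys)

inside_mean, inside_spread = predict_with_spread(models, 5.0)
outside_mean, outside_spread = predict_with_spread(models, 50.0)
print(f"x=5:  {inside_mean:.1f} +/- {inside_spread:.2f}")
print(f"x=50: {outside_mean:.1f} +/- {outside_spread:.2f}")
```

The members agree closely inside the range they were trained on and drift apart far outside it, which mirrors the urban versus rural example above.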

Bayesian Neural Networks Instead of giving you one answer, these models provide a range: "Sales will be between $1M-$1.5M with 90% confidence." Think of it as getting multiple expert opinions rather than one definitive answer.

Monte Carlo Dropout This technique is like asking 100 different experts and seeing how much they agree. High agreement means high confidence; disagreement signals uncertainty.
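
The mechanics can be sketched in a few lines: keep dropout switched on at prediction time, run the same input through the network many times, and look at how much the answers vary. The tiny network below uses hand-picked, hypothetical weights standing in for a trained model, so only the procedure is meaningful, not the numbers.

```python
import random
import statistics

# Hypothetical weights for a one-input, six-hidden-unit network (not trained).
HIDDEN_WEIGHTS = [0.8, -0.5, 1.2, 0.3, -0.9, 0.6]
OUTPUT_WEIGHTS = [1.0, 0.7, -0.4, 1.5, 0.2, -1.1]


def forward(x, rng, drop_rate=0.5):
    """One stochastic forward pass with dropout left on."""
    out = 0.0
    for w_hidden, w_out in zip(HIDDEN_WEIGHTS, OUTPUT_WEIGHTS):
        hidden = max(0.0, w_hidden * x)  # ReLU activation
        if rng.random() < drop_rate:
            continue  # this hidden unit is dropped for this pass
        out += w_out * hidden / (1.0 - drop_rate)  # inverted dropout scaling
    return out


def mc_dropout_predict(x, n_passes=100, seed=0):
    """Mean and standard deviation over repeated stochastic passes."""
    rng = random.Random(seed)
    samples = [forward(x, rng) for _ in range(n_passes)]
    return statistics.fmean(samples), statistics.stdev(samples)


mean, spread = mc_dropout_predict(2.0)
print(f"prediction: {mean:.2f}, disagreement across passes: {spread:.2f}")
```

Each pass plays the role of one "expert", and the spread across passes is the disagreement signal described above.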

Do note that not all of these techniques provide what we call calibrated probabilities (but let’s not go into that). Suffice it to say that it is useful to have some indicator of how confident (or certain) a prediction is.

Unexpectedness: Unknown Unknowns

This is different from uncertainty. An uncertain AI might say "I'm 60% sure this transaction is fraudulent." An AI exhibiting unexpected behavior might confidently flag all transactions from a certain ZIP code as fraudulent because it discovered an unintended correlation in the training data.

Unexpectedness grows with model complexity. A simple linear regression predicting house prices has uncertainty, but it won't suddenly start valuing houses based on the number of vowels in the street name unless you put that feature in, and if it did, you could tell from the model coefficients. A deep neural network, however, might discover and act on such spurious correlations without you expecting it.

Examples of Unexpected Behavior

Emergent capabilities: ChatGPT solving problems it was never explicitly trained for

Gaming the system: The boat racing AI that learned to score points by going in circles forever instead of finishing races

Adversarial vulnerabilities: A few small stickers causing a road-sign classifier to read a stop sign as "Speed Limit 45"

Hidden biases: The hiring AI that rejected resumes mentioning "women's" activities

Key Techniques to Address Unexpectedness

Red Teaming Like ethical hackers for AI – dedicated teams trying to break your system before deployment.

Alignment Training Ensuring AI pursues what we actually want, not just what we literally asked for. Includes techniques like Constitutional AI and RLHF.

Hallucination Mitigation Lowering the chance of AI confidently stating falsehoods through retrieval-augmented generation.

Robustness Testing Systematically testing on edge cases, out-of-distribution data, and adversarial examples.

Unexplainability: Knowing Why

Unexplainability is our inability to understand how an AI reached its decision. This isn't just an academic concern – it's crucial for trust. It’s related to both transparency and interpretability. Transparency is usually more about disclosure, while interpretability is usually about peering into the innards of models. But in this note, let’s treat explainability, interpretability and transparency together as understanding AI (and understanding is incidentally another ‘U’!).

Two Levels of Explainability

Global explanations: How the model works overall

Local explanations: Why it made this specific decision

The challenge? As models become more complex (and thus more prone to unexpected behavior), they also become harder to explain.

Key Techniques to Address Unexplainability

Counterfactual Explanations "Your loan was denied, but if your income were $10K higher, it would be approved." This tells users exactly what would need to change.
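
A minimal sketch of how such an explanation could be produced: search for the smallest income increase that flips the decision. The approval rule below is a hypothetical stand-in for a real loan model, and the function names are my own.

```python
def approve(income, debt):
    """Hypothetical approval rule standing in for a trained loan model."""
    return income - 2 * debt >= 40_000


def income_counterfactual(income, debt, step=1_000, max_raise=200_000):
    """Smallest income increase (in multiples of step) that flips a denial.

    Returns 0 if already approved, or None if nothing within max_raise works.
    """
    if approve(income, debt):
        return 0
    for extra in range(step, max_raise + step, step):
        if approve(income + extra, debt):
            return extra
    return None


extra = income_counterfactual(income=45_000, debt=7_500)
print(f"Denied, but approved if income were ${extra:,} higher")
```

Real counterfactual methods search over several features at once and try to keep the suggested change realistic, but the basic question is the same: what is the smallest change that alters the outcome?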

Feature Importance Methods (LIME, SHAP) Highlighting which inputs most influenced a decision – showing that "payment history" was the key factor.
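
LIME and SHAP need their own libraries, so as a simpler stand-in here is a sketch of permutation importance, a different and cruder technique in the same family: shuffle one input column and measure how much worse the predictions get. Note that this gives a global view rather than an explanation of one decision. The scoring model and feature names are hypothetical.

```python
import random


def model(row):
    """Hypothetical credit score: leans on payment history, ignores shoe size."""
    return 3.0 * row["payment_history"] + 0.5 * row["income"] + 0.0 * row["shoe_size"]


def mean_abs_error(rows, targets):
    return sum(abs(model(r) - t) for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, feature, seed=0):
    """Increase in error after shuffling one feature across rows."""
    rng = random.Random(seed)
    shuffled = [r[feature] for r in rows]
    rng.shuffle(shuffled)
    permuted = [{**r, feature: v} for r, v in zip(rows, shuffled)]
    return mean_abs_error(permuted, targets) - mean_abs_error(rows, targets)


# Made-up data where the targets follow the model exactly (baseline error is 0).
rng = random.Random(0)
rows = [
    {"payment_history": rng.random(), "income": rng.random(), "shoe_size": rng.random()}
    for _ in range(200)
]
targets = [model(r) for r in rows]

for name in ("payment_history", "income", "shoe_size"):
    print(name, round(permutation_importance(rows, targets, name), 3))
```

Shuffling a feature the model relies on hurts accuracy a lot, while shuffling one it ignores changes nothing.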

Attention Visualization For neural networks, showing which parts of the input the model "focused on."

There are many other such techniques.

Cross-Cutting Analysis Techniques

Several analysis methods can help address these limitations:

Sensitivity Analysis How it works: Change inputs systematically to see how outputs respond.

Uncertainty: Large output changes from small input changes indicate high uncertainty

Unexpectedness: Counterintuitive responses reveal unexpected behaviors

Unexplainability: Shows cause-and-effect relationships
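
A minimal one-at-a-time sketch of sensitivity analysis: bump each input by 1% while holding the others fixed and record how the output moves. The house-price formula and its feature names are hypothetical.

```python
def sensitivity(model, inputs, bump=0.01):
    """Change in output when each input is raised by `bump` (1%), one at a time."""
    base = model(inputs)
    changes = {}
    for name, value in inputs.items():
        bumped = {**inputs, name: value * (1 + bump)}
        changes[name] = model(bumped) - base
    return changes


def price_model(x):
    """Hypothetical house-price formula standing in for a trained model."""
    return 50_000 + 300 * x["area_sqm"] - 1_000 * x["age_years"]


house = {"area_sqm": 100.0, "age_years": 20.0}
for name, change in sensitivity(price_model, house).items():
    print(f"{name}: 1% increase changes the price by {change:+.0f}")
```

A response with the wrong sign (say, price rising with age) would be the kind of counterintuitive result worth investigating.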

Stability Analysis How it works: Test if similar inputs produce similar outputs.

Uncertainty: Instability indicates uncertainty regions

Unexpectedness: Unstable behavior patterns reveal unexpected model quirks

Unexplainability: More stable models may be easier to explain

Subpopulation Analysis How it works: Examine performance across different groups.

Uncertainty: Reveals where the model is less confident

Unexpectedness: Uncovers biases and group-specific behaviors

Unexplainability: Helps explain why models work differently for different populations
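
In its simplest form, subpopulation analysis is just computing the same error metric per group instead of once overall. The sketch below uses made-up predictions and the urban versus rural split from earlier in this note.

```python
from collections import defaultdict


def error_by_group(records):
    """Mean absolute error per group; records are (group, prediction, actual)."""
    totals = defaultdict(lambda: [0.0, 0])  # group -> [sum of errors, count]
    for group, prediction, actual in records:
        totals[group][0] += abs(prediction - actual)
        totals[group][1] += 1
    return {group: total / count for group, (total, count) in totals.items()}


# Made-up predictions versus actual outcomes.
records = [
    ("urban", 10, 11),
    ("urban", 20, 19),
    ("rural", 10, 15),
    ("rural", 20, 12),
]
for group, error in error_by_group(records).items():
    print(f"{group}: mean absolute error {error:.1f}")
```

A single overall error figure would hide the fact that the model does much worse on the rural records.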

Error Analysis How it works: Systematically study failure patterns.

Uncertainty: Error clusters indicate uncertain regions

Unexpectedness: Unusual failure modes reveal blind spots

Unexplainability: Understanding errors helps explain limitations

Stress Testing How it works: Push models to extreme conditions.

Uncertainty: Quantifies confidence at boundaries

Unexpectedness: Reveals breaking points

Unexplainability: Clarifies operational limits

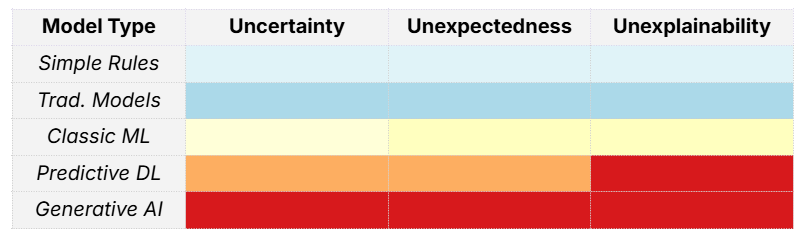

How The Three U's Vary Across Model Types

Traditional Models (Econometric, Financial, Statistical)

Think linear regression, ARIMA, logistic regression

Uncertainty: Well-understood through established statistical theory

Unexpectedness: Minimal – limited model capacity means limited surprises

Unexplainability: High explainability due to interpretable parameters

Classic Machine Learning (E.g., SVM, Gradient Boosted Decision Trees)

The workhorse of many business applications

Uncertainty: Techniques available to understand this (ensemble methods, calibration)

Unexpectedness: Moderate risk, mainly from feature engineering surprises

Unexplainability: Single decision trees are generally interpretable; boosted ensembles of many trees and SVMs are less so

Predictive Deep Learning

Neural networks for recommendation, forecasting, classification, regression

Uncertainty: Challenging but possible with techniques mentioned above, adapted for deep learning models

Unexpectedness: High risk from complex feature learning, but the limited range of prediction outputs makes it tractable

Unexplainability: Major challenge requiring specialized techniques

Generative AI

The new frontier

Uncertainty: Extremely challenging due to the unlimited permutations – how confident can AI be about creative or unbounded outputs?

Unexpectedness: Very high – emergent behaviors, hallucinations, jailbreaks

Unexplainability: Most challenging – billions of parameters creating novel content

Practical Implications

Understanding these three U's isn't academic – it can impact how we use AI:

All models have uncertainty, but complexity brings unexpectedness: While every model must grapple with uncertainty, complex models may surprise us more.

Choose your trade-offs wisely:

Need high certainty? Medical diagnosis

Need explainability? Loan decisions

Can tolerate unexpectedness? Creative applications

Layer your defenses: Use multiple techniques together. Uncertainty quantification + robustness testing + explainability tools = safer AI.

Match the model to the stakes:

High-stakes decisions → Simple, explainable models with well-quantified uncertainty

Creative tasks → Accept some unexpectedness but monitor for harmful outputs

Exploratory analysis → Complex models acceptable with human oversight

Build evaluation into your process: Don't just evaluate final performance – assess uncertainty calibration, test for unexpected behaviors, and verify explainability.

What challenges have you faced with uncertainty, unexpectedness, or unexplainability in your use of AI? Do you even agree with this characterization?

How do you balance these trade-offs in your organization?

This note is the start of the 'Thinking in Risks' series, building on the foundations laid in 'Thinking in AI' and 'Thinking in Networks'.